Introduction

Everybody knows that the league table is bollocks at the start of the season. This is because the sample size is too small to draw any confident conclusions about team strength.

While the outcome of any given football match can be heavily influenced by luck, we assume that over time the best teams will drop the fewest points and rise to the top of the league table as luck evens out.

The problem is that a 38-game football season is not long enough for luck to completely even out. Mark Taylor has recently estimated that 152 matches would result in a league table that better reflects the true differences in quality between teams.

Obviously that is never going to happen. So, over the course of a season, if we really want to know how good/bad a team has been, we need a way to estimate team strength without relying on the league table.

Team Strength is a Latent Variable

Team strength is an example of what statisticians call a latent variable, because it is not measured directly; it is inferred from other variables that are measured, such as shots or expected goals (xG).

In my own work, I have mostly used shots on target ratio (SoTR) to infer team strength, but given that team strength is a latent variable, a more appropriate approach might be principal component analysis (PCA).

In a nutshell, PCA takes a set of variables and transforms them into an equal number of weighted linear combinations (components), ordered so that the first principal component (PC1) captures the largest share of the variance in the original data set.

For an example of PCA applied to football data, see Martin Eastwood's excellent article here.

Using PCA to Estimate Team Strength

Here is how I developed my new PCA-based Team Rating.

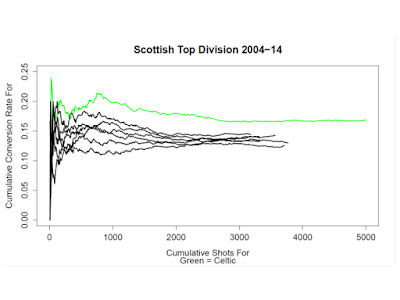

First, I downloaded 10 seasons of Scottish top-flight match data (2004-2014) from football-data.co.uk. Next, I aggregated the data by season and team, resulting in a sample size of 120 team-seasons. Then, I performed a PCA in R (prcomp function) using the following four variables (scaled to unit variance):

- Total Shots Ratio = Shots For/(Shots For + Shots Against)

- Shots on Target Ratio = SoT For/(SoT For + SoT Against)

- Score Rate = Goals For/SoT For

- Save Rate = 1-(Goals Against/SoT Against)

(Note: Score Rate + Save Rate = PDO)
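As a rough sketch of what these four definitions look like in code (Python here rather than the R used for the analysis; the function name `team_metrics` and the season totals are made up for illustration):

```python
def team_metrics(shots_for, shots_against, sot_for, sot_against,
                 goals_for, goals_against):
    """Return the four PCA inputs, plus PDO, for one team-season.

    Assumes season totals, so the shots-on-target counts are non-zero.
    """
    tsr = shots_for / (shots_for + shots_against)
    sotr = sot_for / (sot_for + sot_against)
    score_rate = goals_for / sot_for
    save_rate = 1 - goals_against / sot_against
    return {
        "TSR": tsr,
        "SoTR": sotr,
        "ScoreRate": score_rate,
        "SaveRate": save_rate,
        "PDO": score_rate + save_rate,
    }


# Made-up season totals, for illustration only.
example = team_metrics(shots_for=500, shots_against=400,
                       sot_for=200, sot_against=150,
                       goals_for=60, goals_against=45)
print(example)
```

With these invented totals the team outshoots its opponents (TSR of about 0.56) while its Score Rate (0.30) and Save Rate (0.70) add up to a PDO of 1.0, up to floating-point rounding.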

PC1 accounted for 56% of the variance (see Appendix), and was strongly associated with the two most reliable metrics in the data set, TSR and SoTR (loadings > 0.60). Thus, PC1 appears to represent the latent variable team strength.

Interestingly, Score Rate and Save Rate were also positively associated with PC1, but with relatively weak loadings. This is consistent with the view that, although relatively volatile, PDO does contain some information about team strength, just not as much as TSR or SoTR.
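To make the PCA step concrete, here is a minimal sketch in Python/NumPy rather than R. It standardizes each column and takes a singular value decomposition, which is the same basic approach prcomp uses. The data are simulated, so the printed loadings and variance shares will not match the figures quoted above. The function name `pca_standardized` and all simulation parameters are assumptions for illustration.

```python
import numpy as np


def pca_standardized(X):
    """PCA on columns scaled to zero mean and unit variance.

    Returns (loadings, explained_ratio, scores); loadings[:, 0] is PC1.
    """
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # SVD of the standardized data: rows of Vt are the component directions.
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    loadings = Vt.T
    # The sign of a component is arbitrary, so orient PC1 such that the
    # first variable (TSR here) loads positively.
    if loadings[0, 0] < 0:
        loadings[:, 0] *= -1
    explained_ratio = s**2 / np.sum(s**2)
    scores = Z @ loadings
    return loadings, explained_ratio, scores


# Simulated data only: 120 team-seasons driven by one hidden "strength".
rng = np.random.default_rng(0)
strength = rng.normal(size=120)
X = np.column_stack([
    0.50 + 0.08 * strength + rng.normal(scale=0.02, size=120),  # TSR
    0.50 + 0.09 * strength + rng.normal(scale=0.03, size=120),  # SoTR
    0.30 + 0.01 * strength + rng.normal(scale=0.03, size=120),  # Score Rate
    0.70 + 0.01 * strength + rng.normal(scale=0.03, size=120),  # Save Rate
])

loadings, explained_ratio, scores = pca_standardized(X)
print("PC1 loadings:", np.round(loadings[:, 0], 2))
print("Variance explained:", np.round(explained_ratio, 2))
```

In this simulation the shot ratios dominate PC1 by construction, because they were generated with a much stronger link to the hidden strength variable than the two conversion rates.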

Assuming that PC1 represents team strength, a team's PC1 score can be used as a composite rating. Thus, my Team Rating is essentially a weighted linear combination of a team's TSR, SoTR, Score Rate, and Save Rate, with Score Rate and Save Rate having roughly 1/3 the weight of the shot ratios. (James Grayson has developed a similar composite metric, which you can read about here.)

And there you have it: my new Team Rating.

Explanatory Power and Reliability

The scatter plot above depicts the relationship between my new Team Rating, goal difference, and points per game at the end of the season for the 2004-2014 data set.

As you can see, my new Team Rating has excellent explanatory power, with r^2 values of 94% and 89% for goal difference and points respectively. In contrast, SoTR only explains 80% and 73% of the variance in goal difference and points respectively.
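For a straight-line fit with a single predictor, r^2 is simply the squared Pearson correlation between the two variables. The sketch below assumes that is how the figures above were obtained; the ratings and goal differences in it are invented, not taken from the data set.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_squared(x, y):
    """Share of variance in y explained by a straight-line fit on x."""
    return pearson_r(x, y) ** 2


# Invented end-of-season values for five teams, for illustration only.
ratings = [-2.1, -0.8, 0.0, 0.9, 2.4]
goal_diff = [-30, -12, 1, 10, 41]
print(round(r_squared(ratings, goal_diff), 3))
```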

In addition, the line of best fit relating Team Rating to goal difference goes through zero on both axes. This means a team with a rating of 0 at the end of the season is expected to have a goal difference of 0.

Also, the Old Firm seasons in the upper right quadrant of the plot fall around the same regression line as the rest of the league, which is nice.

The second scatter plot depicts the same relationships as the previous plot, this time using four seasons that were not included in the PCA (I used the predict function in R to calculate the PC1 scores for the new data). Again, we see the same excellent explanatory power, even when new data were used.
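Scoring new seasons does not mean refitting the PCA. The new data are centred and scaled with the means and standard deviations saved from the original fit, then combined using the original PC1 weights, which is what predict does for a prcomp object. Here is a minimal Python sketch of that projection. The function name `pc1_score` and every number in it are hypothetical placeholders, not the values from the actual analysis.

```python
def pc1_score(row, center, scale, loadings):
    """Project one team-season onto PC1 using parameters saved from the
    original PCA (same centring, scaling and weights; nothing is refitted)."""
    return sum(w * (x - c) / s
               for x, c, s, w in zip(row, center, scale, loadings))


# Hypothetical stored parameters, in the order TSR, SoTR, Score Rate, Save Rate.
# Placeholders only: these are NOT the values from the article's PCA.
CENTER = [0.50, 0.50, 0.30, 0.70]
SCALE = [0.06, 0.07, 0.04, 0.04]
LOADINGS = [0.67, 0.67, 0.22, 0.22]

average_team = [0.50, 0.50, 0.30, 0.70]
strong_team = [0.62, 0.64, 0.33, 0.72]

print(pc1_score(average_team, CENTER, SCALE, LOADINGS))
print(pc1_score(strong_team, CENTER, SCALE, LOADINGS))
```

A team that matches the stored means on all four metrics scores exactly 0, and a team that is above average on all four gets a positive rating.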

To assess within-season reliability, or "repeatability" of team differences, I examined the correlation between Team Rating in the first half of the season and Team Rating in the second half of the season in the four new data sets.

As you can see from the bar chart, my new Team Rating exhibits a level of within-season reliability similar to that of TSR and SoTR, and is more reliable than either goal difference or points per game.

Team Rating in Action

The bar chart above depicts the current Team Ratings for the SPFL Premiership. As you can see, Hearts are rated as the 3rd best team, despite currently sitting at the top of the table.

The reason for this discrepancy is that the Jam Tarts' results so far have been heavily influenced by PDO, specifically an unsustainable Score Rate of 0.67. Basically, they've been lucky compared to the other teams in the league, but Hearts are still very good.

Thanks for reading. If you have any questions about my Team Rating, please post a comment below, or you can find me on Twitter @226blog.

Appendix